Friday, December 14, 2007

Latest Lego session was very good

Wednesday, August 29, 2007

Meetings 012 through 014

There will be more to point out later, but the slide show (click here to view the video) speaks relatively well about what we have been doing the past three meetings.

We have been tweaking the mechanism slightly to add stability and a more professional look, but most of what we have done is work on the color detection algorithms.

We did a major refactoring in week 13, replacing a high-low match with a +/- "fudge factor" with a waterfall design, where a maximum value for each color is evaluated before the color is determined or the reading is passed on to the next color condition. (Hope that's enough detail. I will try to get an NQC sample up in the next few days.)
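Until the NQC sample is posted, here is a rough Python sketch of what a waterfall check of this kind might look like. It is not the team's code. The function name and the cut-off values are assumptions: the cut-offs are simply midpoints between the unlit raw readings recorded in Meeting 002, and 1023 is assumed as the ceiling for raw RCX readings.

```python
# Hypothetical upper bounds, ordered from the lowest reading to the highest.
# Each value is the midpoint between two neighboring Meeting 002 unlit readings;
# the team's real thresholds are not given in this log.
WATERFALL = [
    ("yellow", 723),
    ("orange", 730),
    ("red", 757),
    ("green", 782),
    ("brown", 802),
    ("blue", 1023),  # assumed ceiling for a raw RCX reading
]


def classify(reading):
    """Return the first color whose maximum value the reading does not exceed."""
    for color, max_value in WATERFALL:
        if reading <= max_value:
            return color  # color determined, stop here
        # otherwise fall through to the next color condition
    return "unknown"


if __name__ == "__main__":
    for sample in (721, 735, 785, 819):
        print(sample, classify(sample))
```

Compared with a high-low match, each color needs only one number to tune, and the order of the checks does the rest.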

Thursday, August 2, 2007

Meeting 011

Meeting 11 was puzzling. We began by disconnecting all the cables and using electrical contact cleaner to clean them. As the sensors are analog in the old-style RCX, bad contacts can affect the results.

As we ran through our testing refinement, we discovered that every color we tested had exactly the same values.

We had some cabling errors, which were quickly fixed, and a stranger problem: the extra light was not coming on at all.

Had we inspected the sensor assembly earlier in the meeting, we would have noticed that neither this light nor the sensor was coming on, and easily fixed it. Another hard lesson in working with hardware.

Laura continued to revise the detection program. One key missing ingredient, talked about 10 weeks ago and easily forgotten, was the need to verify if an M&M fell into the range of being yellow or orange, and then run a second test with the auxiliary light on to determine which color it was.

Saturday, July 28, 2007

Meeting 010 Follow-up

Ron and I had a little bit of free time over lunch today, so we got together and continued the work we started at yesterday's meeting. I've fleshed out the code, so the 'bot now:

- Goes to the sensor array

- Takes two readings, one lit and one unlit

- Figures out the color of the candy based on those readings (well, not really -- more on this in a minute)

- Goes to the correct bin (ditto)

- Turns the bucket one full turn, dumping the candy into the bin and resetting the bucket for the next trip to the to-be-created hopper

- Returns to the starting point
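The cycle above can be sketched in Python as a single routine. This is only an outline of the control flow, not the NQC program itself: `RecordingBot`, its method names, and the `pick_bin` callback are all hypothetical stand-ins that log each step instead of driving motors.

```python
class RecordingBot:
    """Hypothetical stand-in for the robot: logs each step instead of moving."""

    def __init__(self, unlit, lit):
        self.unlit = unlit  # canned reading with the auxiliary light off
        self.lit = lit      # canned reading with the auxiliary light on
        self.log = []

    def go_to_sensor(self):
        self.log.append("go_to_sensor")

    def read_sensor(self, light_on):
        self.log.append("read_lit" if light_on else "read_unlit")
        return self.lit if light_on else self.unlit

    def go_to_bin(self, number):
        self.log.append(f"go_to_bin:{number}")

    def dump_bucket(self):
        # One full turn: dumps the candy and resets the bucket.
        self.log.append("dump_bucket")

    def return_to_start(self):
        self.log.append("return_to_start")


def sort_one_candy(bot, pick_bin):
    """Run one trip: sense, choose a bin, dump, and come back."""
    bot.go_to_sensor()
    unlit = bot.read_sensor(light_on=False)
    lit = bot.read_sensor(light_on=True)
    bin_number = pick_bin(unlit, lit)  # color decision is pluggable
    bot.go_to_bin(bin_number)
    bot.dump_bucket()
    bot.return_to_start()
    return bin_number
```

Keeping the color decision behind `pick_bin` means the movement logic can be tested with a hard-coded bin while the detection is still unreliable.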

Unfortunately, we've run into a snag. The readings that we get vary widely, even with the same particular piece of candy. The value returned seems to be affected by bucket position (Ron fiddled with that, and we think we have the candy centered right under the sensor now, but we had to add a "bumper" to do it), candy orientation, candy size, and who knows what else.

Naturally, this means the software doesn't work -- it can't tell red from yellow, for instance, and given the extreme variation in readings, it's hard to know how to change the code to compensate. Ron came up with an interesting idea -- putting the sensor at the bottom of the bucket. This would eliminate the size and orientation variables, and make the bucket position moot -- it would just have to be positioned under something that blocks out ambient light.

Sometime next week, or at next week's meeting, we'll need to experiment to see if that would actually work -- information we need before we do a major bucket redesign.

Thursday, July 26, 2007

Meeting 010

We had a very successful time today, although once again work and real life limited the number of participants -- it was only me, Ron, and Kimberly.

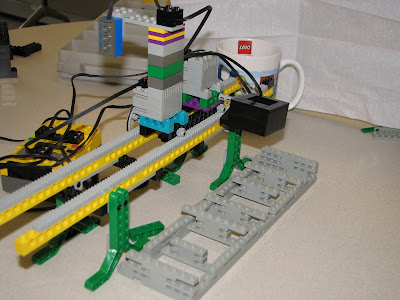

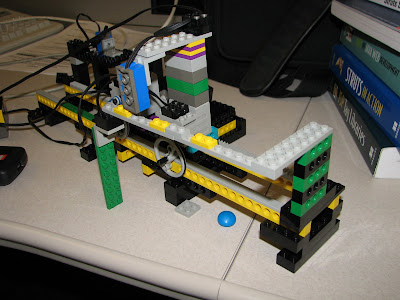

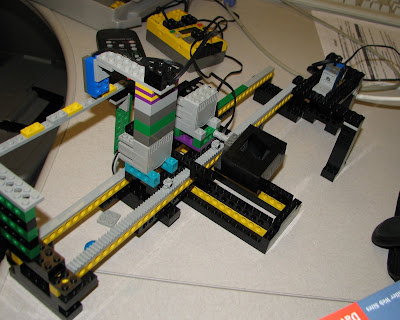

Due to the absence of our Fearless Leader, there are no pictures of the session itself, but an updated photo may be taken early next week. The structure itself hasn't changed all that much; I redid the marker track support so that it no longer interferes with the hopper pulley wheel, and Kimberly attached the structure with the buckets to the superstructure; this made calibrating the marker bricks possible, and also helped stabilize the structure overall (the color sensor enclosure was unbalancing the structure somewhat).

I had gotten a start on the software, but hadn't had a chance to try it out with the actual brick and 'bot. Ron and I worked on getting some of the rudimentary functionality working. We learned the difference between tasks (they run concurrently, and can interfere with each other) and subroutines (which are more what we're used to), and once we got that straightened out, things came together nicely.

We had some problems at first with the counting of the markers; either the 'bot would keep going and stop counting, or it would stop in the wrong place. With some fiddling -- and creative thinking from Ron -- we got some functions working.

So now the 'bot will:

- Start from the soon-to-be-hopper end of the track and move itself into position under the sensor (done via timing, based on an engine power of 5)

- Count markers until it gets to the one it needs, and then stop (sensing isn't happening yet, so we hard-code the bucket number at this point)

- Count markers until it reaches the hopper end of the track again

All in all, very cool! It's just a pity work isn't as interesting!

Meeting 009

Today's meeting was a lot of fun, and we accomplished a lot. Once again we had guests (Doug's beautiful wife Nichelle, and his children: Naomi, David, and Isaac (see last meeting's entry for photos)). The kids seemed to have more fun with our two "crash test dummies" (christened by Isaac Buster and Duster), and didn't do too much on the construction.

It was a good thing we had the kids there, though, because everyone but me and Doug cancelled! We’ve never had such a lightly-attended session! Fortunately, we had a great time anyway and made good progress:

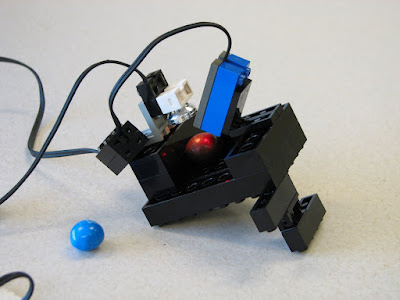

- We enclosed the light sensor and light so that when the sensor tests each candy the ambient light will interfere as little as possible.

- We used inverted roof bricks to prevent a misalignment of the hopper when it moves underneath the sensor assembly.

- I put together a first attempt at the “hopper position determiner” (the strip of contrasting tiles that the tram will use to figure out when it’s over a bucket). This will probably be refined next week, but I was very excited to be a) constructing something on my own, and b) using friction connectors. It’s something that’s undoubtedly trivial to those with expertise, but it was new to me and very cool.

During the ensuing week I did a little bit more; I took readings on the "bin marker" track to see if the colors I used (green for the track, yellow for the markers) gave sufficiently different readings that we could use them. It turns out they do:

| Color | Value in ambient light |
| --- | --- |
| Yellow (marker) | 680 |
| Green (track) | 763 |
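With a gap that wide, one way to count markers is to pick a threshold halfway between the two readings and count each drop below it as a new marker. The Python sketch below illustrates that idea only. The function names and the sample readings are made up, and the real program would read the sensor live rather than from a list.

```python
MARKER_YELLOW = 680  # measured value for a yellow marker tile
TRACK_GREEN = 763    # measured value for the green track
THRESHOLD = (MARKER_YELLOW + TRACK_GREEN) // 2  # 721, halfway between the two


def count_markers(readings, threshold=THRESHOLD):
    """Count track-to-marker transitions in a sequence of raw readings."""
    count = 0
    on_marker = False
    for value in readings:
        is_marker = value < threshold
        if is_marker and not on_marker:
            count += 1  # count only the leading edge of each marker
        on_marker = is_marker
    return count


def stop_index(readings, target, threshold=THRESHOLD):
    """Return the index of the sample where the target-th marker begins, or None."""
    count = 0
    on_marker = False
    for index, value in enumerate(readings):
        is_marker = value < threshold
        if is_marker and not on_marker:
            count += 1
            if count == target:
                return index
        on_marker = is_marker
    return None
```

Counting only the leading edge matters because the sensor will see the same marker for several samples in a row as the tram passes over it.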

I also made a preliminary attempt at creating the program. I had fun with NQC and BricxCC, and I hope to get a chance prior to the next meeting to see if the code is even remotely close to doing what we want -- or doing anything.

One discovery I made is that the lovely track we constructed last week interferes with the pulley for the hopper -- the middle support is just a teensy bit too high. At our next meeting we'll have to see if we can lower it and still get the support for the track that we need.

Thursday, July 12, 2007

Meeting 008

Today we had some special guests for the latter half of the meeting; Naomi (3.5), David (8), and Isaac (12) Wilcox joined us while Mom hit the Whole Foods store nearby.

We are continuing our process of refining the mechanisms we've settled upon. Today we worked on the tram portion of the robot, first trying a worm-gear-based movement that was ultimately unsuccessful. Laura suggested we return to the "Cinderella's coach" design, thinking that big gears would look good.

That led to a discussion and demonstration of gear ratios and speed versus torque. It was noticed that the tiny gears we'd used for the past couple of weeks were not moving smoothly; their radius is just a bit too tight. David suggested the next largest gears, and I included a smaller pulley than we'd tried before. The combination of the new gears and pulleys gives us a faster tram movement than we'd seen, which should be advantageous, as long as it doesn't go too fast for our sensing mechanisms.
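For anyone who missed the demonstration, the speed-versus-torque trade-off comes down to one ratio. The Python sketch below assumes an 8-tooth gear driving a 24-tooth gear, both standard Lego sizes, purely as an example. The log does not record which gears ended up on the tram, and friction losses are ignored.

```python
from fractions import Fraction


def gear_ratio(driver_teeth, driven_teeth):
    """Ratio of driven gear teeth to driver gear teeth (3 means 3:1 reduction)."""
    return Fraction(driven_teeth, driver_teeth)


def output_speed(input_rpm, driver_teeth, driven_teeth):
    """Output speed drops by the gear ratio."""
    return input_rpm / gear_ratio(driver_teeth, driven_teeth)


def output_torque(input_torque, driver_teeth, driven_teeth):
    """Output torque rises by the same ratio (ideal case, no friction)."""
    return input_torque * gear_ratio(driver_teeth, driven_teeth)


if __name__ == "__main__":
    # Example only: an 8-tooth gear driving a 24-tooth gear.
    print(gear_ratio(8, 24))         # 3
    print(output_speed(300, 8, 24))  # 100
    print(output_torque(1, 8, 24))   # 3
```

Swapping the two gears inverts the ratio: the output turns three times as fast with a third of the torque, which is the direction the faster tram movement went.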

Of course, David wasn't always helpful. He also insisted on testing the tram movement via the remote every time I tried to attach a piece to it.

Isaac dug out one of the large Lego figures that was in our box, and decided to "Busterize" him; so now we have our own version of "Buster," the crash test dummy from the Mythbusters TV program.

We expect to use a color-contrasting tile and light sensor to determine which hopper the tram stops at. Movement at the extremities will be limited by stops and governed by the belt drive we are using, so we don't have to be particularly precise for those calibrations.

Today I found myself doing much of the building. I need to figure out a way to increase the Lego building experience of the rest of the team. Laura has done quite a bit, but none of it Technic--which was my own case before MindStorms was released. Several team members are talking about purchasing their own robotics sets, too, but no one has gotten past the "talking about" stage.

Friday, July 6, 2007

Meeting 007

Having spent the past number of weeks hammering out the basic operation and structure our candy-sorting robot would use, it was time to start making that structure look a little more professional.

That essentially meant completely disassembling what we'd cobbled together. We also took the time to study some Technic building techniques, addressing the fear of the team that we didn't have enough "regular" Lego pieces to build what we were doing. (Technic pieces are perhaps less intuitive than "regular" Lego bricks, and only a few of our team members have much experience with Lego, so this was a productive, hands-on time for learning.)

Kimberly's suggestion of "chicken feet" turned out to be very useful in designing a way to raise the platform up to a workable height without adding a lot of unnecessary structure. It's fascinating to see our really, really rough mess starting to look very professional, but that's exactly what one would expect with iterative development.

By the end of next week's meeting, we should have the finalized sensor platform and position-tracking sensor (which will read a series of contrasting-color tiles to determine location) in place, and will then need to decide between starting the color-sorting programming, or coming up with the feeder mechanism. We haven't done any of the programming yet, so that might make the most sense. We shall see what the team decides.

Thursday, June 21, 2007

Meeting 006

With our Fearless Leader off in the Wilds of Florida this week, we were concerned that we might not be able to make much in the way of progress. We need not, however, have been concerned! Our numbers were sorely depleted -- it was just me, Irena, and Birt. Nevertheless, we accomplished much more than anyone could have expected.

With the extra rack parts our F.L. sent us, we extended the track that the sorter will travel on. We put together a prototype bin to hold the sorted candies, but we encountered a major problem -- we don't have enough of the regular Lego pieces! We must address this problem with our F.L. when he returns.

Birt had to depart a bit early, so for the last fifteen minutes it was just me and Irena. We built a support for the sensor array and connected it to the track. We were even able to test it with the sorter and it was great -- it moves under the sensor array with almost no snagging. We will have to program carefully to ensure that the sorter is exactly horizontal -- or else we'll have to use a 1/4 height separator to raise up one or both ends of the sensor array support, but that would allow ambient light in, which might skew our readings too much.

Thursday, June 14, 2007

Meeting 005

Last week we tried working as two teams. The less experienced members ended up in a single group and built a concept, a rotating base, that crashed in the end. This week we decided to work as a single team, and the sorter prototype is now taking the shape of a working mechanism.

The idea to test was to slide the sorter back and forth and drop the M&M at the appropriate position. I wish we had photos to share: by the end of the meeting the chamber was moving smoothly, and the experimental drop of the M&M was greeted with a round of applause.

Next week we can try to use the same light sensor for positioning by scanning gray and yellow bricks (another picture could be placed here). Doug, our Lego Expert, will not be there with us. Happy vacation, Doug!

Thursday, June 7, 2007

Thursday, May 31, 2007

Meeting 003

In our third meeting, we finally started to assemble the prototype candy sorter. This project is going to take a while, so the week-to-week progress seems small ...

Still, we are having a great time working together, and learning how tricky going from concept to implementation can be.

We've decided to use a rotating "bucket" that will accept a single piece of candy, rotate underneath a "scanner," and rotate back to a particular hopper where the candy piece will be dumped.

I spent part of this meeting trying to figure out why my rotation sensor wasn't returning any values, and ultimately discovered that, for reasons I still can't explain, it simply wouldn't work on one of the three input channels available on the RCX we were using. We expect the rotation/angle sensor to make our task much easier, as it seems to be quite accurate.

The image above is actually from a video you can view, if you would like a better idea of how we envision the candy sorter working.

Above: Part of our group: Irena S., Kimberly P., "LegoDoug," "Birt," and Peter P.

Thursday, May 24, 2007

Meeting 002

In the second meeting, we got started with the project itself.

Our first task was to determine, having chosen to build a candy-sorting machine, whether we could actually sort candies reliably based on color. Our answer would come with an investigation of the light sensor and how it read colors under various conditions. I suppose we could think of this as the basic research phase, as opposed to applied research, which one doesn't normally think about when doing a robotics project.

We fired up the Bricx Command Center, which offers some great remote-control options for the prototyping and testing stage, as well as a very decent NQC development environment. After some initial stumbling (it has been a long time since I've used this), we were able to set the sensor types and get data back in its various formats.

Above: Ron, Irena, and Laura work on testing the M&Ms in our test apparatus.

We chose M&Ms as our candy to sort primarily because the color consistency is carefully quality-controlled, so we knew even if we ate them all, we'd be able to get more in the same color. In addition, we rejected jelly beans because of variation in color, especially among multiple brands. This particular M&M type is also "mostly round" in shape, which should help with the color identification process, at least in theory. We surmised, additionally, that the ratio of logo size to overall surface area on plain M&Ms might produce too much deviation in color readings, although we did not positively determine if that was the case.

Discovery: The two different light sensors we have, seen in the test candy holder above, do not produce the same readings. There is enough variation to throw off any programmed color detection. We will need to account for this when we actually get down to writing code. (This is why it's good programming practice to define constants to allow for value tweaking.)

We talked about how ambient light as well as supplied light might greatly affect the readings, and discovered that was completely correct.

We mounted the Lego light and the Lego light sensor tangent to the candy, and checked our readings with and without the light. This turned out to be important, because the readings we got for yellow and orange were very similar, but by adding and toggling the external light source, we can easily differentiate the colors under those conditions.

Because ambient light levels so dramatically affected the readings we got, we put the whole apparatus under a large box to get our final readings. We will have to enclose or shade the test chamber in our final product.

Our final test bench, above, used a pocket set at an angle to keep the M&M in the proper place.

The readings, done under a box, gave us the following results using "raw" sensor data:

| Color | Value | Value with Added Light |
| --- | --- | --- |
| Orange | 725 | 565 |
| Red | 735 | 558 |
| Yellow | 721 | 617 |
| Blue | 819 | 571 |
| Green | 779 | 565 |
| Brown | 785 | 564 |

Notice that the values for orange and yellow are very close, but that if we get into that range, we can programmatically activate the light to differentiate them. Otherwise, we shouldn't need to activate the second light.
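A minimal Python sketch of that two-step check, using the table's numbers, might look like the following. The cut-offs are assumptions taken as midpoints between table values (730 sits between orange and red without the light, 591 between orange and yellow with it), and `read_with_light` is a hypothetical callback standing in for "turn on the light and read the sensor."

```python
AMBIGUOUS_MAX_UNLIT = 730  # assumed: midpoint of orange (725) and red (735), unlit
LIT_SPLIT = 591            # assumed: midpoint of orange (565) and yellow (617), lit


def yellow_or_orange(unlit, read_with_light):
    """Return 'yellow' or 'orange', or None if the unlit reading is out of range.

    read_with_light is only called when the second test is actually needed,
    so the extra light stays off for every other color.
    """
    if unlit > AMBIGUOUS_MAX_UNLIT:
        return None  # not in the yellow/orange range, no second test
    lit = read_with_light()
    return "yellow" if lit >= LIT_SPLIT else "orange"


if __name__ == "__main__":
    print(yellow_or_orange(721, lambda: 617))
    print(yellow_or_orange(725, lambda: 565))
    print(yellow_or_orange(779, lambda: 565))
```

Note that red sits only 10 counts above orange without the light, so the first cut-off leaves very little margin and would need tuning against real readings.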

Next week we will begin prototyping the mechanism, our first bit of real Lego assembly. I am eager to see how it goes.

Thursday, May 17, 2007

Meeting 001

Although I envisioned doing this long ago, we finally started a group at work doing robotics using the Lego MindStorms Robotic Invention System.

Our first meeting was spent providing an introduction to the Lego MindStorms system, demonstrating the various sensors and motors, and how the RCX worked. We talked about project ideas, and chose a candy sorter as our first project.

The last thing we did in meeting one was kick around some very basic design ideas for the candy sorter. In doing so, we learned that we had initial conceptual views which varied greatly from one another. This will definitely be an advantage in problem-solving, and it will be fascinating to see how we narrow down our designs to one type, and then refine them.

In this case, although we had some other variations, the two predominant design concepts were a hopper-fed mechanism and a robot that would navigate within a defined area and retrieve candy scattered there. Both seemed valid initially, although we elected a hopper-fed design due to problems we foresaw with a gathering-type robot pushing candies outside of the gathering area.